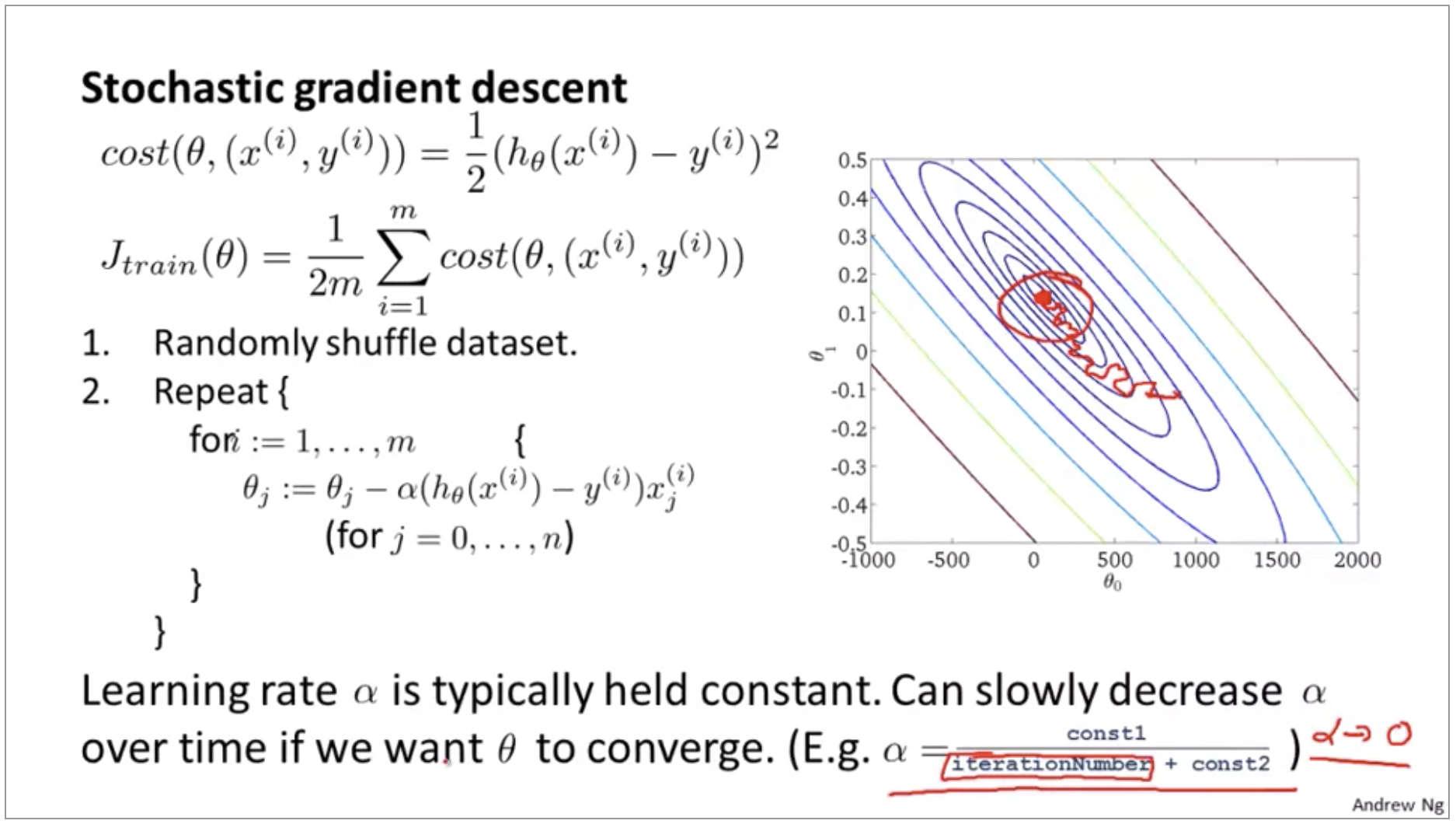

STOCHASTIC GRADIENT DESCENT UPDATE

In the above graph, the lowest point on the parabola occurs at x = 1. The objective of the gradient descent algorithm is to find the value of “x” such that “y” is minimum. Here “y” is termed the objective function: the function that the gradient descent algorithm operates upon in order to descend to its lowest point. It is important to understand the above before proceeding further.

I use the linear regression problem to explain the gradient descent algorithm. The objective of regression, as we recall from this article, is to minimize the sum of squared residuals. We know that a function reaches its minimum value when the slope is equal to 0. By using this technique, we solved the linear regression problem and learnt the weight vector. The same problem can be solved by the gradient descent technique.

“Gradient descent is an iterative algorithm that starts from a random point on a function and travels down its slope in steps until it reaches the lowest point of that function.”

This algorithm is useful in cases where the optimal points cannot be found by equating the slope of the function to 0. In the case of linear regression, you can mentally map the sum of squared residuals to the function “y” and the weight vector to “x” in the parabola above.

The general idea is to start with a random point (in our parabola example, start with a random “x”) and find a way to update this point with each iteration such that we descend the slope. The steps of the algorithm are:

1. Find the slope of the objective function with respect to each parameter/feature. In other words, compute the gradient of the function. (To clarify, in the parabola example, differentiate “y” with respect to “x”. If we had more features like x1, x2 etc., we would take the partial derivative of “y” with respect to each of the features.)
2. Pick a random initial value for the parameters.
3. Update the gradient function by plugging in the current parameter values.
4. Calculate the step size for each feature as: step size = gradient * learning rate.
5. Calculate the new parameters as: new params = old params - step size.
6. Repeat steps 3 to 5 until the gradient is almost 0.

The “learning rate” mentioned above is a flexible parameter which heavily influences the convergence of the algorithm. Larger learning rates make the algorithm take huge steps down the slope, and it might jump across the minimum point, thereby missing it. So it is generally safer to stick to a low learning rate such as 0.01. It can also be shown mathematically that gradient descent takes larger steps down the slope when the starting point is high above the minimum and takes baby steps as it gets closer to the destination, so that it is quick yet careful not to miss it. This follows from step 4: on a bowl-shaped function like the parabola, the gradient shrinks as we approach the minimum, and the step size shrinks with it.

There are a few downsides of the gradient descent algorithm. We need to take a closer look at the amount of computation we make for each iteration of the algorithm.

Say we have 10,000 data points and 10 features. The sum of squared residuals consists of as many terms as there are data points, so 10,000 terms in our case. We need to compute the derivative of this function with respect to each of the features, so in effect we will be doing 10,000 * 10 = 100,000 computations per iteration. It is common to take 1,000 iterations, so in effect we have 100,000 * 1,000 = 100,000,000 computations to complete the algorithm. That is pretty much an overhead, and hence gradient descent is slow on huge data.

Stochastic gradient descent comes to our rescue!! “Stochastic”, in plain terms, means “random”. Where can we potentially induce randomness in our gradient descent algorithm? Yes, you might have guessed it right!! It is while selecting data points at each step to calculate the derivatives. SGD randomly picks one data point from the whole data set at each iteration, which reduces the computations enormously: in our example, 1 * 10 = 10 computations per iteration instead of 100,000.

It is also common to sample a small number of data points instead of just one point at each step, and that is called “mini-batch” gradient descent. Mini-batch tries to strike a balance between the goodness of gradient descent and the speed of SGD.

I hope this article was helpful in getting the hang of the algorithm.
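As a rough illustration of the update steps and of the stochastic and mini-batch variants described in this article, here is a minimal Python sketch for linear regression. It is not taken from the article: the function names `gradient` and `gradient_descent`, the `batch_size`, `tol` and `seed` parameters, and the toy data are all assumptions made for the example. It also averages the squared residuals over the sampled points instead of summing them, so that the same learning rate of 0.01 behaves similarly for every batch size, and it treats the intercept as a constant feature of 1.

```python
import random


def gradient(weights, xs, ys):
    """Step 1: gradient of the mean squared residual over the given points.

    Assumption: we average over the points (the article sums them), which
    only rescales the gradient by the number of points used.
    """
    grad = [0.0] * len(weights)
    for x, y in zip(xs, ys):
        residual = y - sum(w * xi for w, xi in zip(weights, x))
        for j, xj in enumerate(x):
            grad[j] += -2.0 * residual * xj
    return [g / len(xs) for g in grad]


def gradient_descent(xs, ys, learning_rate=0.01, n_iters=1000,
                     batch_size=None, tol=1e-6, seed=0):
    """batch_size=None uses every point (plain gradient descent),
    batch_size=1 is SGD, and anything in between is mini-batch."""
    rng = random.Random(seed)
    # Step 2: pick a random initial value for the parameters.
    weights = [rng.uniform(-1.0, 1.0) for _ in range(len(xs[0]))]
    indices = list(range(len(xs)))
    for _ in range(n_iters):
        # The only difference between the variants: which points we use.
        batch = indices if batch_size is None else rng.sample(indices, batch_size)
        # Step 3: plug the current parameter values into the gradient.
        grad = gradient(weights, [xs[i] for i in batch], [ys[i] for i in batch])
        # Step 6: stop once the gradient is almost 0. Only checked for the
        # full data set, since the gradient at a few sampled points is a
        # noisy signal of convergence.
        if batch_size is None and max(abs(g) for g in grad) < tol:
            break
        # Step 4: step size = gradient * learning rate.
        steps = [g * learning_rate for g in grad]
        # Step 5: new params = old params - step size.
        weights = [w - s for w, s in zip(weights, steps)]
    return weights


# Toy data (an assumption for the example): y = 3 + 2 * x1, no noise.
# The leading 1.0 in each row is the constant feature for the intercept.
xs = [[1.0, (i - 10) / 10] for i in range(21)]
ys = [3.0 + 2.0 * x[1] for x in xs]

for name, size in [("gradient descent", None), ("SGD", 1), ("mini-batch", 5)]:
    w = gradient_descent(xs, ys, n_iters=5000, batch_size=size)
    print(name, [round(v, 3) for v in w])  # each should land close to [3, 2]
```

Each iteration touches `batch_size * n_features` terms, so on the 10,000-point, 10-feature example from the text a single SGD step does 10 derivative computations where a full gradient descent step does 100,000. The trade-off is that each SGD step follows a noisier direction.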
